Project objective:

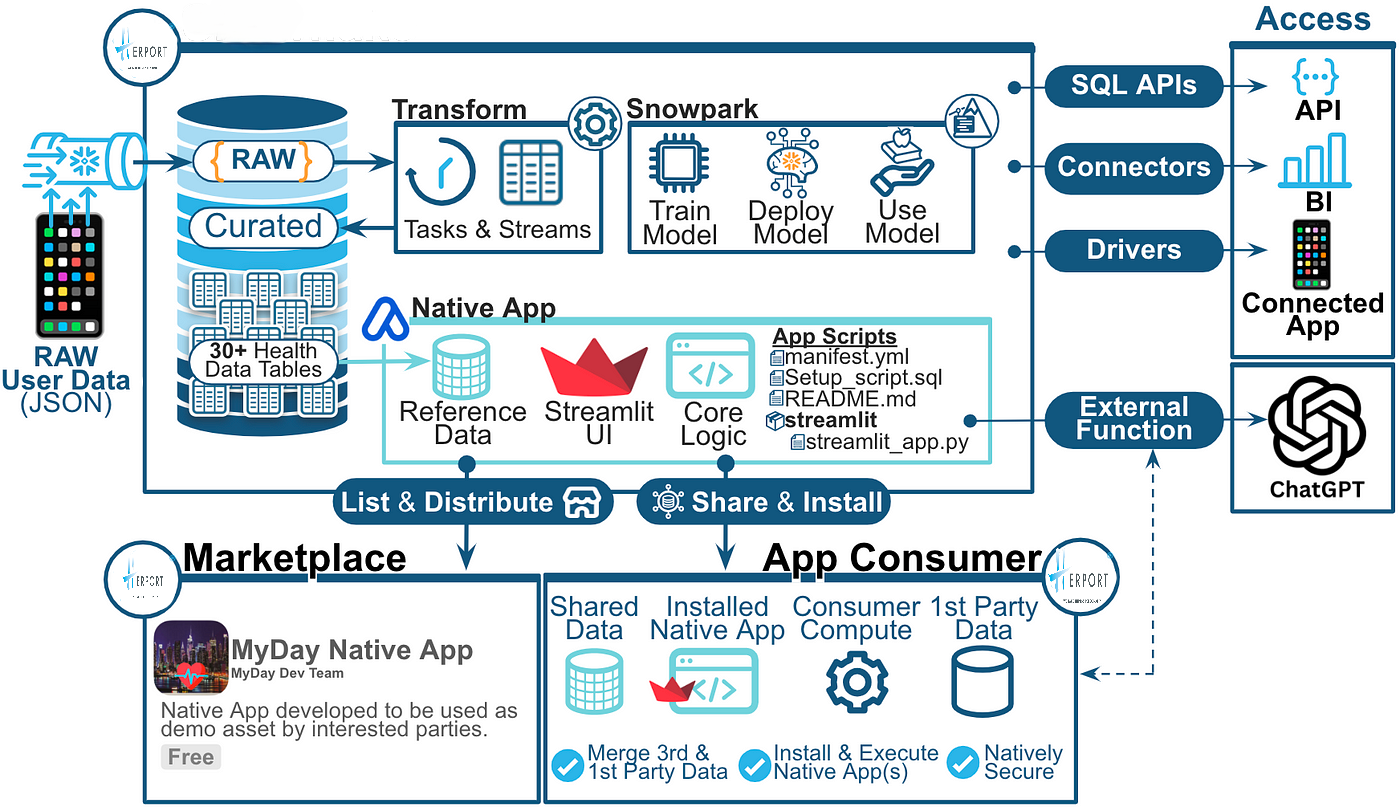

The main objective of this project is to implement an efficient, automated ELT (Extract, Load, Transform) architecture with Snowflake as the central platform. The solution extracts data from several sources, loads it into a staging environment, transforms it according to business needs, and then serves the transformed data to reporting and data visualisation tools. The aim is for data to be accessible, reliable and ready for analysis, in near real time or in batch, so that businesses can make fast, informed decisions.

The ultimate goal is to ensure that data from multiple systems (batch sources, real-time feeds, etc.) is properly ingested, cleansed, enriched and made available to end-users in accurate dashboards and reports, while minimising technical complexity and maximising automation.
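To make the load-then-transform order concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module as a stand-in for Snowflake. Raw records are loaded unchanged into a staging table, and cleansing and aggregation happen afterwards in SQL inside the database, which is what distinguishes ELT from ETL. The table names (`raw_orders`, `orders_by_customer`) and the sample rows are hypothetical; in Snowflake the load step would typically be a `COPY INTO` from a stage, and the transform step a SQL or Snowpark job.

```python
import sqlite3
from typing import Iterable


def extract() -> list[tuple[str, str, str]]:
    """Extract: pull raw rows from a source system (hypothetical sample data).

    Values are kept as strings, as they might arrive from a CSV export.
    """
    return [
        ("1", " alice ", "120.50"),
        ("2", "BOB", "80.00"),
        ("3", "alice", "30.25"),
        ("4", "", "15.00"),  # missing customer: filtered out during transform
    ]


def load(conn: sqlite3.Connection, rows: Iterable[tuple[str, str, str]]) -> None:
    """Load: copy raw rows into a staging table without modifying them."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS raw_orders (order_id TEXT, customer TEXT, amount TEXT)"
    )
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)


def transform(conn: sqlite3.Connection) -> None:
    """Transform: cleanse and aggregate inside the database using SQL."""
    conn.execute("DROP TABLE IF EXISTS orders_by_customer")
    conn.execute(
        """
        CREATE TABLE orders_by_customer AS
        SELECT LOWER(TRIM(customer))     AS customer,
               COUNT(*)                  AS order_count,
               SUM(CAST(amount AS REAL)) AS total_amount
        FROM raw_orders
        WHERE TRIM(customer) <> ''
        GROUP BY LOWER(TRIM(customer))
        """
    )


def run_pipeline() -> list[tuple[str, int, float]]:
    """Run extract -> load -> transform against an in-memory database."""
    conn = sqlite3.connect(":memory:")
    load(conn, extract())
    transform(conn)
    result = conn.execute(
        "SELECT customer, order_count, total_amount FROM orders_by_customer ORDER BY customer"
    ).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    for row in run_pipeline():
        print(row)
```

Because `raw_orders` is never modified, the transformation can be changed and re-run later against the same raw data, which is the practical benefit of keeping the transform step inside the platform.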

Platforms & Technologies:

- Snowflake: The central data platform in this architecture. It stores and manages data at large scale and supports transformations inside the platform through SQL, Snowpark and client tools such as SnowSQL.

- Data sources:

  - Batch sources (databases, file systems): Data extracted in batches from relational databases or file systems.

  - CDC (Change Data Capture): Captures row-level changes (inserts, updates and deletes) in transactional databases so that downstream copies stay up to date in near real time.

  - Kafka (real-time streaming): Used to ingest streaming data from distributed systems, primarily for real-time use cases.

- Snowflake staging layer:

  - External stages: Temporary storage of data in external cloud storage such as Amazon S3 or Azure Blob Storage before ingestion into Snowflake.

  - Internal stages: Temporary storage of data directly in Snowflake-managed storage, ready for loading and subsequent processing.

- Transformation technologies:

  - Snowpark: Lets you write complex transformations in Python, Scala or Java that execute inside Snowflake.

  - SnowSQL: Snowflake's command-line client for executing SQL queries, automating transformation scripts and managing data.

  - SQL Scripting: Used for set-based data transformations within Snowflake, which scale to large data volumes and can be chained into automated pipelines.

- Data visualisation and reporting:

  - Snowsight: Snowflake's web interface, whose built-in dashboards are used to view transformed data and monitor the status of processing pipelines.
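CDC feeds like the one listed under data sources are often applied in Snowflake with a `MERGE INTO` statement that upserts or deletes target rows according to the latest change for each key. The following pure-Python sketch reproduces that logic so it can be run without a Snowflake account. The `ChangeEvent` shape, its `seq` ordering field and the in-memory `dict` target are hypothetical stand-ins, not the format of any particular CDC tool or Kafka connector.

```python
from dataclasses import dataclass
from typing import Literal, Optional

Op = Literal["INSERT", "UPDATE", "DELETE"]


@dataclass(frozen=True)
class ChangeEvent:
    """One row-level change captured from a source table (hypothetical shape)."""

    seq: int              # position in the source change log; higher means newer
    op: Op                # type of change
    key: int              # primary key of the changed row
    row: Optional[dict]   # new row image; None for deletes


def latest_per_key(events: list[ChangeEvent]) -> dict[int, ChangeEvent]:
    """Keep only the newest change for each key, even if events arrive out of order."""
    latest: dict[int, ChangeEvent] = {}
    for event in events:
        current = latest.get(event.key)
        if current is None or event.seq > current.seq:
            latest[event.key] = event
    return latest


def apply_changes(target: dict[int, dict], events: list[ChangeEvent]) -> dict[int, dict]:
    """Merge a batch of change events into a copy of the target table.

    Follows MERGE-style semantics: a DELETE removes the key, while an
    INSERT or UPDATE upserts the new row image.
    """
    merged = dict(target)  # leave the caller's table untouched
    for key, event in latest_per_key(events).items():
        if event.op == "DELETE":
            merged.pop(key, None)
        else:
            merged[key] = dict(event.row or {})
    return merged


if __name__ == "__main__":
    customers = {1: {"name": "Alice", "city": "Paris"}, 2: {"name": "Bob", "city": "Lyon"}}
    batch = [
        ChangeEvent(seq=10, op="UPDATE", key=1, row={"name": "Alice", "city": "Nice"}),
        ChangeEvent(seq=11, op="INSERT", key=3, row={"name": "Chloe", "city": "Lille"}),
        ChangeEvent(seq=12, op="DELETE", key=2, row=None),
        ChangeEvent(seq=13, op="UPDATE", key=3, row={"name": "Chloe", "city": "Nantes"}),
    ]
    print(apply_changes(customers, batch))
```

Reducing the batch to the latest event per key before applying it means out-of-order delivery within a batch is tolerated and re-running the same batch gives the same result. In SQL, the same step is commonly written with `ROW_NUMBER()` (or Snowflake's `QUALIFY` clause) ahead of the `MERGE`.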

Conclusion:

This architecture illustrates a complete, modern ELT solution designed to handle both real-time data streams and batch loads. It is built on Snowflake, a scalable cloud platform with features for ingesting, storing and transforming data. The ELT approach keeps raw data in a central environment, where it is later transformed and enriched to meet the company's analytical needs.

By automating data pipelines and combining Kafka for real-time streaming with Snowflake for data management and transformation, the architecture provides a robust, scalable foundation that can grow with a business's data processing and analysis needs.

© All rights reserved by GYCLOUDATA